The EU Artificial Intelligence Act calls for AI transparency in a wide variety of sectors. This is not only because specific AI systems can compromise our security and fundamental rights; without transparency, it would also be difficult for the responsible enforcement agencies to verify compliance with existing regulations. The resulting legal uncertainty could cause citizens and businesses to lose their trust in AI. The consequences would be a reluctance to use AI technologies and regulatory intervention by authorities.

As countermeasures, the EU Commission has recommended the following:

- guaranteed security for AI systems,

- legal certainty for AI providers and

- targeted market development for reliable AI in order to create a global competitive advantage for European industry.

For high-risk AI applications (approximately 5% to 15% of all AI applications), there will be binding requirements. However, a large portion of EU citizens believe that these standards should not be limited to this small share of AI systems.

The EU Commission aims to achieve the above goals through

- new documentation requirements,

- traceability and

- transparency of AI systems.

According to European Commission estimates, the costs of fulfilling the legal requirements for high-risk AI, along with the inspection costs, will amount to up to 10% of investments. The same would certainly also apply to businesses that voluntarily follow corresponding codes of conduct, for example for reasons of product safety and product liability.

AI in a nutshell

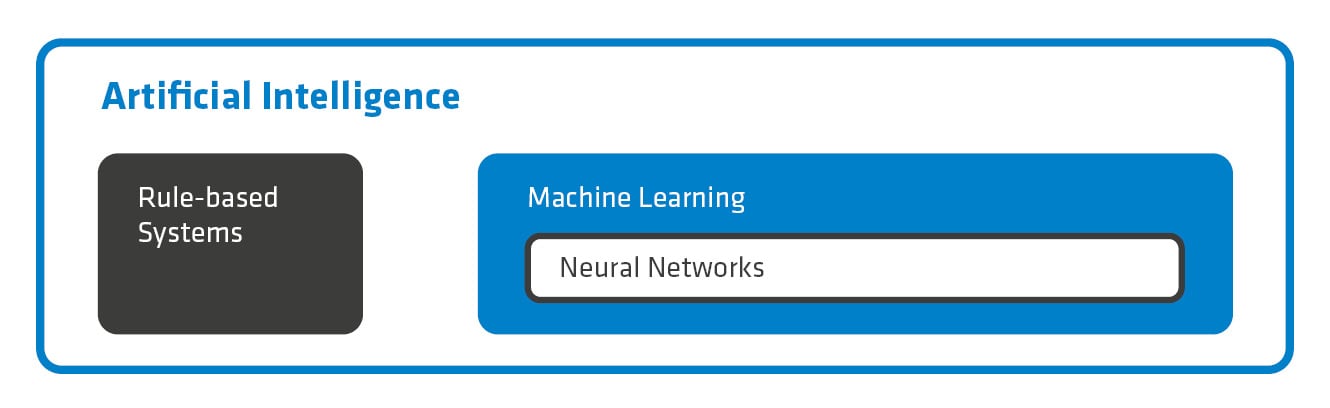

Everyone is talking about the term “artificial intelligence,” which is synonymous with “AI systems” and “AI” — but what exactly does it mean? It is an umbrella term for IT systems that attempt to imitate the human use of intelligence. In other words: thinking. There are two different approaches with regard to its implementation: rule-based systems and machine learning (see Figure 1).

Rule-based systems use only predetermined rules, which is why their behavior can generally be predicted in advance. Machine learning, on the other hand, uses dynamic algorithms that learn independently by approximating correlations in data. This is why the behavior of these systems is essentially unpredictable. Machine learning can be implemented in many ways. Among these, neural networks have become highly prevalent due to their simplicity.

Figure 1: Rule-based systems and machine learning are the two fundamental methods of artificial intelligence. Neural networks are one form of machine learning.
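
The contrast between the two approaches can be made concrete with a small sketch. The insurance claim scenario, the limit of 1000, and the function names are assumptions chosen purely for illustration; they do not come from the AI Act or from any particular product.

```python
# Rule-based: the decision threshold is written down by a human in advance.
def rule_based_flag(amount: float) -> bool:
    """Flag a claim when it exceeds a fixed, human-defined limit."""
    return amount > 1000.0  # hypothetical limit


# Machine learning: the threshold is approximated from labeled examples.
def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Pick the candidate threshold that misclassifies the fewest examples."""
    candidates = sorted(amount for amount, _ in examples)

    def errors(t: float) -> int:
        return sum((amount > t) != label for amount, label in examples)

    return min(candidates, key=errors)


# Hypothetical training data: (claim amount, was it flagged by an expert?)
training_data = [(200.0, False), (450.0, False), (900.0, True), (1500.0, True)]
threshold = learn_threshold(training_data)
```

The fixed rule always behaves the same way, whereas the learned threshold depends entirely on the examples it was given: different training data would produce a different decision boundary without anyone editing the code.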

Comprehensive rules

It is not only at the EU level that lawmakers are compelled to regulate AI. In Germany, too, the influence of artificial intelligence is becoming noticeable in areas such as the financial sector. This is in spite of the fact that the Federal Financial Supervisory Authority (BaFin), when assessing its supervisory activity with regard to AI systems, concluded that no essential changes to its operational procedures were required. The comprehensive rules for inspection and approval are formulated in a technology-neutral way, and the requirements consist of principles that are always interpreted case by case. As a result, the supervisory practice rests on a solid foundation.

However, the fact that neural networks essentially behave like a black box is significant. In addition, the complexity and dimensionality of the models are growing. To keep up with this development, the aim of reproducing a model must be abandoned. Instead, the “explainability” of its behavior comes into focus. This is why BaFin requires modelers to provide a plausible justification for any worsening of their model’s explainability.

The ability of artificial intelligence to optimize itself presents another supervisory challenge. Changes to the models must therefore be reported, and, if necessary, the models may only be put back into operation following approval. For this reason, changes that AI systems make to themselves should be thoroughly examined and documented.
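
One simple way to notice that a model has changed since its last approval is to compare fingerprints of its parameters. The following is a minimal sketch under stated assumptions: the parameter layout, the function names, and the idea of storing an approved fingerprint are hypothetical and are not prescribed by any supervisory authority.

```python
import hashlib
import json


def fingerprint(parameters: dict) -> str:
    """Return a stable SHA-256 hash of the model parameters."""
    # sort_keys makes the serialization independent of dictionary order.
    canonical = json.dumps(parameters, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_reapproval(approved_fingerprint: str, current_parameters: dict) -> bool:
    """True when the running model differs from the approved version."""
    return fingerprint(current_parameters) != approved_fingerprint


approved = {"weights": [0.4, -1.2], "bias": 0.1}   # hypothetical approved model
approved_fp = fingerprint(approved)

retrained = {"weights": [0.5, -1.2], "bias": 0.1}  # after self-optimization
changed = needs_reapproval(approved_fp, retrained)
```

A check like this only detects that something changed. Examining and documenting what the change means for the model's behavior remains a separate task.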

A record for decision-making

An important goal for AI producers emerges from all of this: transparency. Recording the chronological sequence of activities carried out by the AI’s neurons, along with their interconnections, would only appear to be a solution. It makes the system measurable, but measurability is still far from explainability.

Black-box analyses such as Local Interpretable Model-Agnostic Explanations (LIME) are another unsatisfying approach. They help only locally and cannot capture the full complexity of an AI system. What is really needed is clear documentation of the decisions made by AI systems. Similar to the chain of reasoning in a judicial decision, the AI’s mode of operation must be comprehensible at every step.
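
Why a local explanation says little about the model as a whole can be shown with a small sketch. It does not use the LIME library; it is a simplified, LIME-style local surrogate for a model with a single input, and the `black_box` function and all parameter values are assumptions for illustration.

```python
import math


def black_box(x: float) -> float:
    """Stand-in for an opaque model; the explainer only sees its outputs."""
    return x * x


def local_slope(model, x0: float, radius: float = 0.5, steps: int = 21) -> float:
    """Fit a weighted straight line to the model's outputs near x0 and return its slope."""
    # Evenly spaced probe points around x0 (deterministic instead of random sampling).
    offsets = [radius * (2 * i / (steps - 1) - 1) for i in range(steps)]
    xs = [x0 + d for d in offsets]
    ys = [model(x) for x in xs]
    # Points closer to x0 get a larger weight (Gaussian kernel).
    ws = [math.exp(-(d / radius) ** 2) for d in offsets]
    total = sum(ws)
    mean_x = sum(w * x for w, x in zip(ws, xs)) / total
    mean_y = sum(w * y for w, y in zip(ws, ys)) / total
    cov = sum(w * (x - mean_x) * (y - mean_y) for w, x, y in zip(ws, xs, ys))
    var = sum(w * (x - mean_x) ** 2 for w, x in zip(ws, xs))
    return cov / var


slope_at_plus_one = local_slope(black_box, 1.0)
slope_at_minus_one = local_slope(black_box, -1.0)
```

Around an input of 1 the surrogate reports that the output rises with the input (slope close to 2), while around -1 it reports the opposite (slope close to -2). Each explanation is valid in its own neighborhood, but neither describes the overall behavior of the model.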

We presented a suitable solution in Think! 2021: with the Rule-Based Neural Networks (RBNN) approach, we won the adesso SE innovation competition. RBNN combines two opposing artificial intelligence methods into a symbiosis: rule-based systems and neural networks. As a result, the AI automatically includes a report on its decision-making process with every answer it gives. Furthermore, because this report can be looped directly back into the neural network, the result is a self-learning AI system.
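
The internal design of RBNN is not described here, so the following is only a toy illustration of the general idea of pairing readable rules with adjustable weights and returning a report with every decision. The `Rule` class, the `decide` function, the rules, and all weights are hypothetical and should not be read as the actual RBNN implementation.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    applies: Callable[[dict], bool]  # human-readable, predefined condition
    weight: float                    # adjustable, like a weight in a neural network


def decide(rules: list[Rule], bias: float, case: dict) -> tuple[bool, list[str]]:
    """Return a decision plus a report of the rules that contributed to it."""
    score = bias
    report = []
    for rule in rules:
        if rule.applies(case):
            score += rule.weight
            report.append(f"{rule.name}: {rule.weight:+.2f}")
    probability = 1.0 / (1.0 + math.exp(-score))  # sigmoid activation
    report.append(f"probability: {probability:.2f}")
    return probability >= 0.5, report


# Hypothetical rules for flagging an insurance claim for manual review.
rules = [
    Rule("claim above 5000", lambda c: c["amount"] > 5000, 1.5),
    Rule("policy younger than 30 days", lambda c: c["policy_age_days"] < 30, 1.0),
    Rule("no prior claims", lambda c: c["prior_claims"] == 0, -0.8),
]

case = {"amount": 7200, "policy_age_days": 12, "prior_claims": 0}
flagged, report = decide(rules, bias=-1.0, case=case)
```

In this sketch every answer comes with the list of rules that fired and how much each one contributed, which is the kind of decision record the text calls for. How such a report would be fed back into training is left open.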

The dream of infallible AI

However, using RBNN does not automatically free artificial intelligence from uncertainties. Even this AI solution is based on structures that have been predetermined by humans and on predetermined queries that were edited by humans using input data arranged by humans. If the input data itself is inconsistent, or if the constructed rules allow a misinterpretation, a system can derive several contradictory statements and lose its clarity as a result.

Moreover, an overlooked relationship, such as a line of reasoning that is no longer valid, can even lead to a loss of coherence. It must therefore be assumed that RBNN, too, can develop and reinforce a tendency toward specific “thought patterns.”

Suppose an AI has several possible answers to a question and can present a coherent line of argument for each of them. In that case, the explainable AI could also “select” an answer. This is no different in human interactions, such as in the field of science: when several researchers discuss a question that needs to be interpreted, one often receives differing statements in response, even though all of their arguments are based on the same facts and are inherently coherent. Though something like objectivity can emerge from explainability, it is not possible for us to find the truth in this way.

Into the future with Rule-Based Neural Networks

Artificial intelligence is definitely one of the IT technologies, if not the IT technology, that currently has the greatest potential for application. It recognizes fraud, helps people with their work, and sorts emails. Its areas of use include customer service, maintenance, and decision-making. Many of its possible future uses are probably still unknown.

Yet however advantageous it may be to use AI systems, they are still a cause of much unease among manufacturers, operators, and the general population. Whether service providers, producers, or customers, many are skeptical of the new technologies. This has consequences not only at the European level, where the AI Act demands, among other things, transparency for AI systems. German authorities present additional challenges, as the BaFin example illustrates, with regard to competence and responsibility in the production and application of AI.

Anyone who makes their AI fit for the future early on is safe from the surprises that threaten to emerge when using non-transparent AI systems. With transparent AI, you have the authorities and customers on your side. Since RBNN can produce the desired transparency, it is worth becoming more familiar with it. Better late than never.

Would you like to learn more about AI in the insurance sector? Then please do not hesitate to get in touch with Karsten Schmitt, Head of Business Development at adesso insurance solutions.